The AI Sloppening of our Dead Internet

The internet was already getting worse. Now it’s getting useless.

Jack Hannaway

Focus Operations

Dec 7, 2025

There’s a vibe shift happening online.

Not a “people are slightly annoyed” shift. A full‑body recoil.

Because the internet is getting flooded with what John Oliver (correctly) named “AI slop”: cheap, professional‑looking, deeply weird synthetic content that shows up everywhere you scroll.

And at first it was funny. Then it was everywhere. Then it started replacing actual human stuff. And now people are basically writing essays like this one about how this is in fact not very good.

What even is “AI slop”?

A video of a dog giving a TED Talk

A picture of a politician angrily eating a hotdog

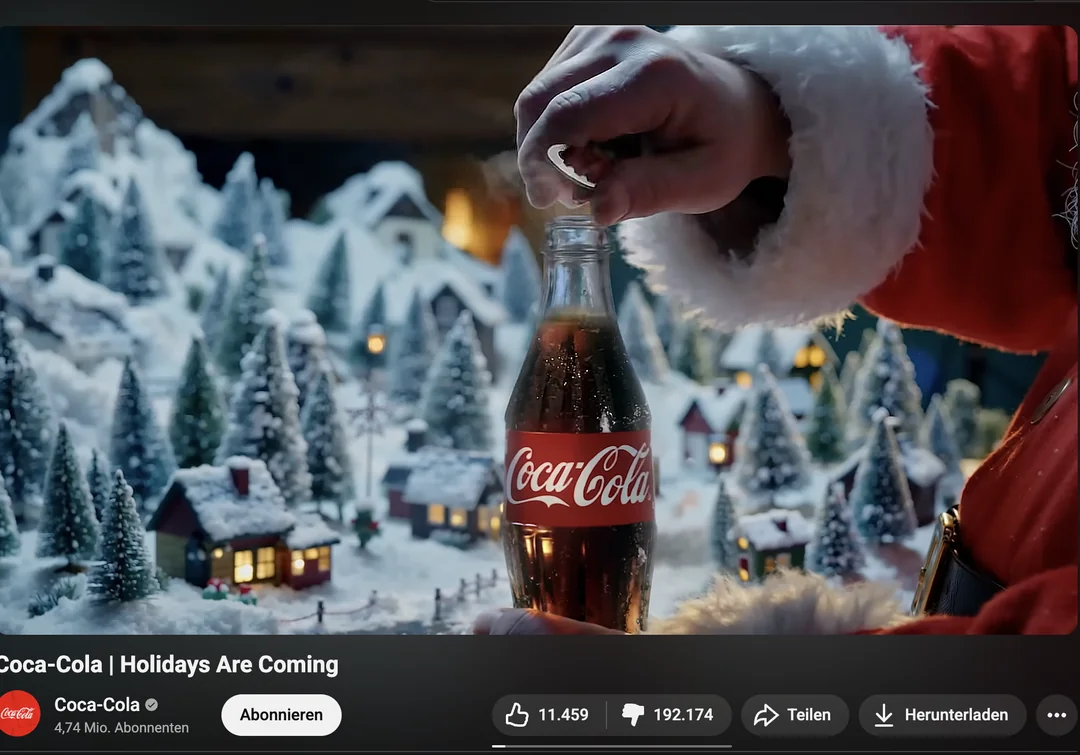

A warm, heartfelt Coca-Cola Christmas ad, loaded with jump cuts and CGI, that was made with AI and then immediately mocked by consumers.

Yes. Thumbs down indeed.

John Oliver’s point was simple: generative tools made it absurdly easy to mass‑produce this stuff—then algorithms shove it into your feed because it performs like spam performs: high volume, high engagement bait, low cost.

And once you notice it, you can’t unsee it. Like when you learn what a “content farm” is, and suddenly realize your entire childhood was written by a guy duct‑taped to a keyboard in an SEO basement.

The “Dead Internet” theory is having a moment (for depressing reasons)

The Dead Internet Theory started as a fringe conspiracy idea that “the internet is mostly bots talking to bots.” The grand, coordinated‑plot version isn’t supported by evidence, but the observable parts (automation, synthetic content, engagement farming) are now showing up so often that even serious outlets are talking about it.

And the stats don’t exactly help the internet beat the “zombie web” allegations:

Imperva’s Bad Bot reporting describes almost 50% of internet traffic as non‑human, with “bad bots” making up nearly one‑third of all traffic.

Reporting tied to Imperva data puts bot traffic at 49.6% and notes that some countries are far worse (Ireland cited at 71%).

TIME reported bots hitting 51% of traffic in 2024, i.e. more bots than humans, and connects that to the economics of platforms and “slop.”

So when people say “the internet feels empty,” they don’t necessarily mean literally nobody’s here. They mean: it’s getting harder to find real people under the pile of synthetic landfill.

Why it’s getting worse: incentives, baby

This is not a mystery. It’s capitalism with a Wi‑Fi connection. Slop wins because:

It’s cheap to produce at massive scale

It’s fast to test (post 100 versions, see what sticks)

It’s algorithmically rewarded (engagement is engagement, even if it’s someone commenting “AMEN 🙏” under Shrimp Jesus)

Nieman Lab documented this exact phenomenon: AI‑generated images becoming a new form of social spam (including “Shrimp Jesus” and similar weirdness) designed to farm engagement.

UNSW breaks down the cycle: AI content farms engagement; bots may amplify it; it becomes a self‑propelling artificial “reality.”

So yeah: the internet is starting to look like it’s dominated by slop and AI‑generated content rather than human content.

Backlash is growing, and it’s getting specific

At first, the backlash was mostly creators. Now it’s gone mainstream, because people keep getting served AI stuff in places where they expect human craft. In addition, people are actually not too pleased about being tricked into believing something is real when it is not.

Example: The McDonald’s “AI Christmas ad” fiasco

McDonald’s Netherlands dropped an AI‑generated Christmas commercial and quickly got roasted for being “creepy,” “soulless,” and generally giving off “holiday spirit, but make it uncanny valley.” They pulled it after the backlash.

This is the new consumer line in the sand: stop making artwork without actual art.

Example: AI art backlash in public spaces

Even curated contexts are catching heat: SFO faced backlash after accusations about AI‑generated artwork in an exhibition, showing how sensitive people have become to where AI is used and how transparent its use is.

Example: Platforms scrambling to clean up the mess

Meta explicitly acknowledged “too much spammy content” crowding out authentic creators and announced feed changes and enforcement against accounts gaming distribution and monetization.

TechCrunch covered Meta lowering reach and monetization for spammy behavior—part of the broader effort to keep feeds from turning into sludge.

Example: Regulators stepping in

South Korea is moving toward requiring AI‑generated ads to be labeled starting in early 2026, driven by a surge in deceptive ads using fabricated experts and deepfakes.

That’s the shift: it’s no longer “lol AI is weird.”

It’s “this is degrading trust, creativity, and consumer safety—please stop.”

The funniest part is also the scariest part: “reality erosion”

Oliver’s take (and it’s hard to argue) is that the danger isn’t only that people will be fooled by fake media.

It’s that the existence of convincing fakes lets bad actors dismiss real footage as fake, too—a corrosive effect on shared reality.

This is how you get a world where:

Authentic video = “AI”

Fake video = “seems real to me”

Everyone argues forever

Nothing matters


AI Slop Bingo

If you want a quick diagnostic tool for “am I looking at slop,” here’s an extremely scientific checklist:

✅ Overly emotional headline (“You won’t BELIEVE what happened…”)

✅ Weird hands (the traditional fingerprint of synthetic nonsense)

✅ Captions unrelated to the image (dog photo + airplane facts energy)

✅ “Experts say” with zero experts

✅ Comments full of bots and confused humans

✅ A strangely smooth, corporate‑friendly vibe that feels like it was written by a smiley HR stapler

If you hit 4+ checkmarks, congratulations: you’re not browsing the internet. You’re consuming slop.

Not all AI is slop (but slop is what the incentives produce)

Let’s be clear: AI can be genuinely useful.

But when the business model is “post infinite cheap content until something hits,” you don’t get Shakespeare.

You get:

Synthetic junk

Stolen styles

Engagement bait

A feed that feels like it was designed by a slot machine with a humanities minor

And that’s why the backlash is growing. People aren’t “anti‑AI” so much as they’re anti‑being‑force‑fed garbage.

The punchline

The internet is not dead.

But it is increasingly haunted by:

bots

spam

synthetic content farms

and a thousand identical posts that all read like “As an AI language model, I too enjoy human feelings.”

And if platforms don’t fix the incentives, we’re headed toward a future where the most authentic thing you see all day is a 404 error page.

Which, honestly, would be kind of refreshing.
